At Daedalus, machine vision is more than just a fascinating technological advancement—it plays a crucial role in the solutions we research, design, and engineer. By integrating machine vision systems into our projects, we’re able to enhance precision, automation, and data analysis for projects across various industries. This allows us to create smarter, more efficient products that solve real-world problems. Our commitment to staying at the forefront of such technologies ensures that we deliver innovative and cutting-edge solutions to our clients.

Machine vision is a subfield of computer vision that enables computers to interpret and understand visual information, much like human eyes and brains do. It involves using cameras, sensors, and software to detect, analyze, and process visual data, and it plays a vital role in AI, robotics, and automation.

One of the core challenges in machine vision is enabling computers to perceive depth—the distance between objects in their view. Understanding how humans perceive depth helps us build similar systems for computers, which leads us to explore stereo vision technology, a method inspired by our own biological depth perception.

With that said, let’s first go back to where machine vision got its inspiration: humans, and our eyes!

How do humans perceive distance?

For people, estimating the distance of objects is so automatic that we almost never think of it in our daily lives. So what goes on in our eyes and in our brains when we do this essential task?

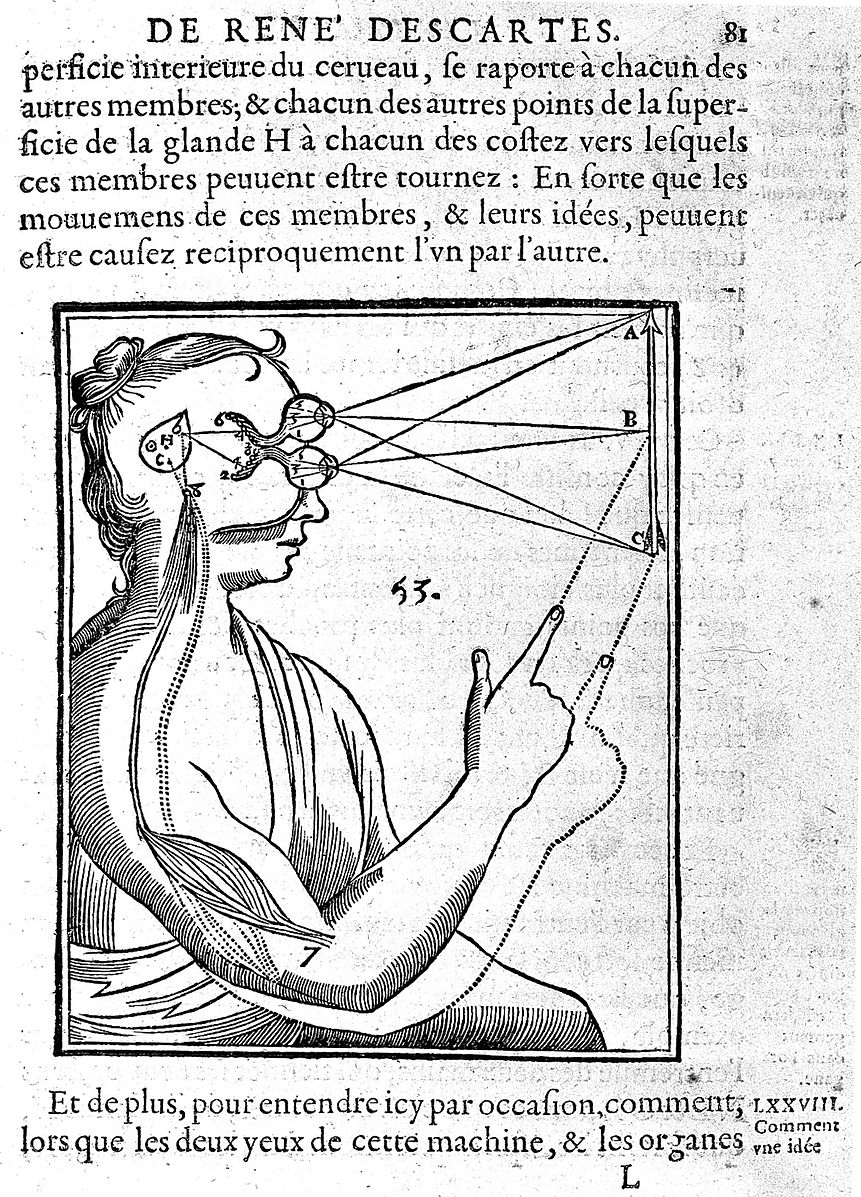

This has been a topic of interest for scientists and philosophers going back millennia. Descartes, a philosopher and mathematician from the 1600s known for inventing the Cartesian x-y plane, believed that when people look at an object in their field of view, they can figure out the distance of the object “by the relation which the two eyes have to each other.” That is, by using the angle that the eyes form as they move inward or outward to see nearer or farther objects, we unconsciously, “as though by a natural geometry,” estimate the distance of objects from us (Dioptrique 140-41).

This idea from hundreds of years ago that it’s the combination of information from both eyes is intuitive today. If you cover one of your eyes, you’ll generally find that it’s harder to perceive depth. But where does the actual calculation take place?

Descartes definitely didn’t have the scientific resources we have today to give a hard answer, but he thought that there must be some sort of sensory organ that receives images from both eyes, and that this organ unconsciously does this distance estimation. He believed this to be a part of the brain called the pineal gland, which, since he thought it was so important for processing sensory information, must even be the “principal seat of the soul, and the place in which all our thoughts are formed.”

Modern neuroscience has debunked this particular model of perception—now we know that visual information is processed at a part of the brain called the occipital lobe, and is actually divided into left and right halves.

Despite his theory being off, you’ll see next how Descartes’ model is closely related to computational depth perception.

How do computers perceive distance?

Computer scientists and engineers have taken inspiration from centuries of research into the human vision system to design computer vision systems.

One of the main strategies for computational depth perception is remarkably similar to Descartes’ model. Instead of two eyes connected to a gland of the brain that combines the information, processes, and forms a guess about what’s happening, scientists and engineers use two cameras connected to a computer. The cameras serve as the eyes, and the computer as the brain. These are called stereo vision systems.

Stereo vision-based depth algorithms rely on the left and right images from the stereo cameras as well as information about the cameras themselves and their positions relative to each other to determine depth. Algorithms of this type have been researched for decades and are called stereo depth algorithms.

How Stereo Depth Algorithms create “Depth Maps”

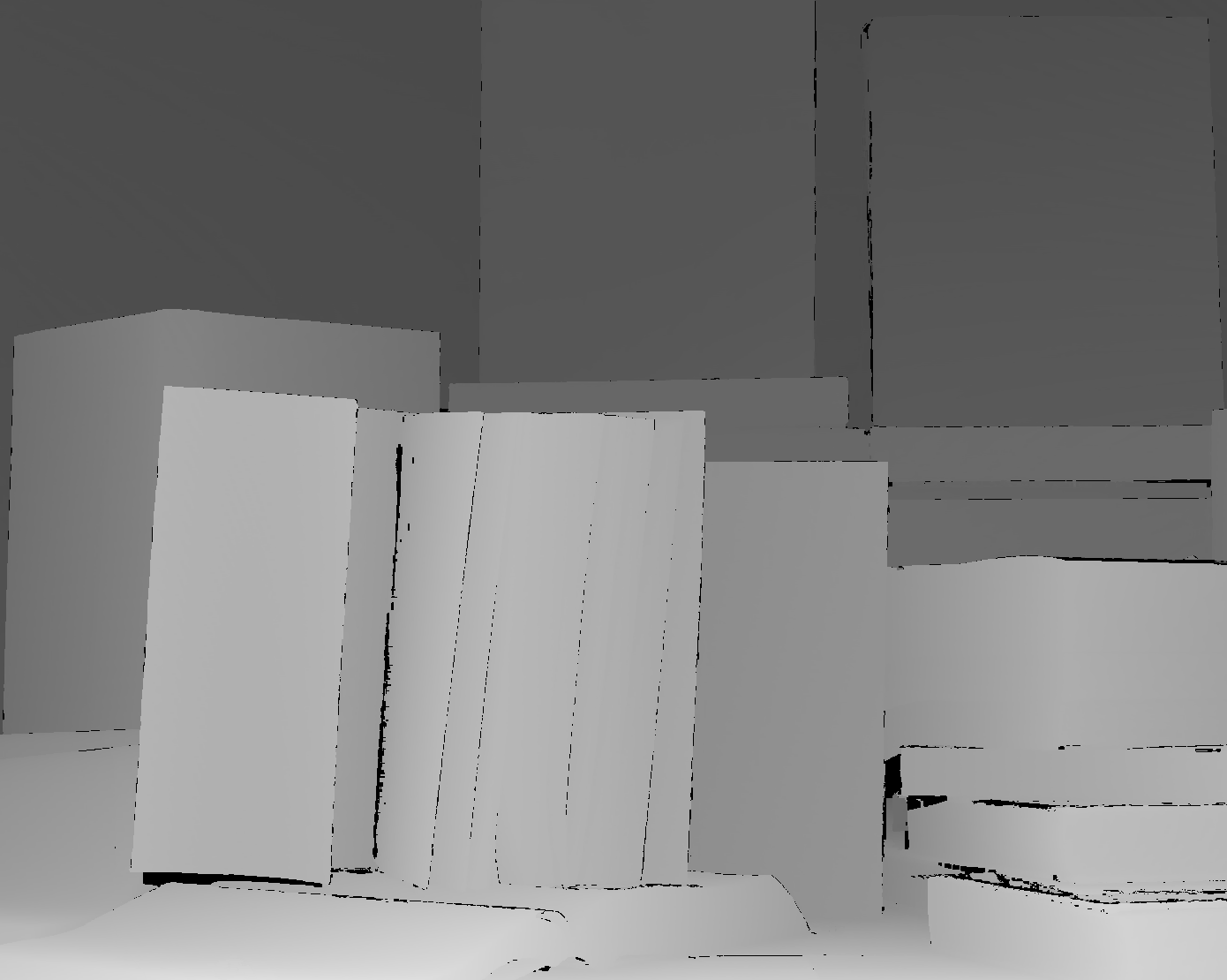

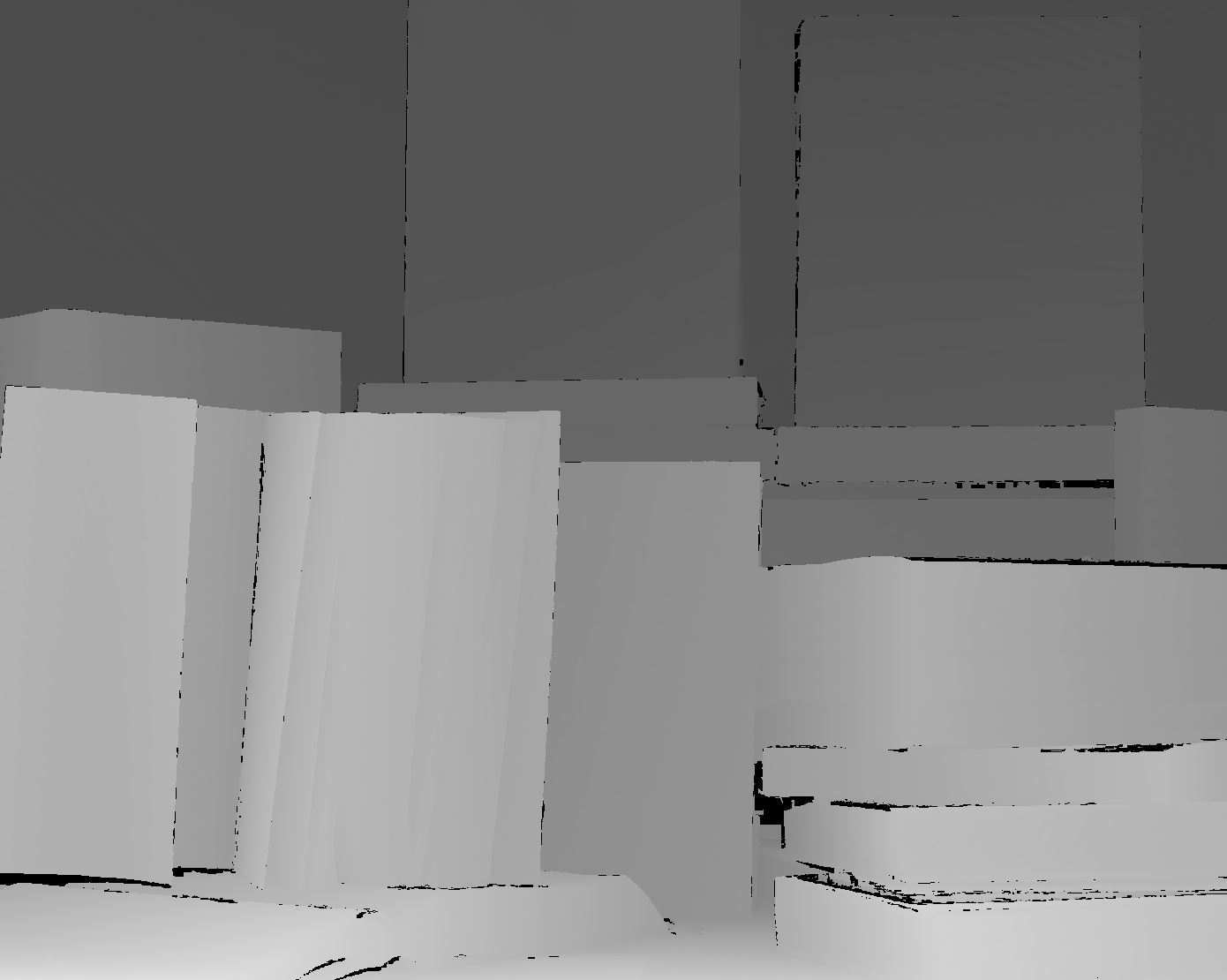

Stereo depth algorithms take in two images from horizontally aligned cameras (stereo cameras) and output what is called a “depth map,” which is a black-and-white image where each pixel value represents how far the object at that pixel is from the cameras.

| Left Camera | Right Camera |

|

|

|

|

Images taken from H. Hirschmüller and D. Scharstein. Evaluation of cost functions for stereo matching.

In IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2007), Minneapolis, MN, June 2007. https://vision.middlebury.edu/stereo/data/

The steps to creating a “depth map” are as follows:

- Rectification of images: Rectification is the process of undistorting images and aligning them so that objects seen by both cameras are on the same horizontal line.

- Computation of matching pixels: Once the images are aligned, the next step is to find out matching pixels between the left and right images. That is, we want to find which pixel in the right image corresponds to a pixel in the left image, for every pixel in the left image. For example, if I have left and right images of a dog, and I have a pixel in the left image that corresponds to the end of the dog’s tail, then I’ll have to find the pixel in the right image that corresponds to the edge of the dog’s tail. Stereo vision algorithms use a variety of techniques to compute matching pixels.

- Calculation of disparity: Once we know the matching pixels, we obtain the disparity by subtracting the x-coordinate of the position of the pixel in the right image from the x-coordinate of the position of the pixel in the left image. The result will be an array (which can be visualized as a black-and-white image) the size of our image(s) that contains the pixel-wise disparity between the left and right images. This array is called a disparity map.

- Filtering or fusing with other inputs (optional): Post-processing filters can be applied, and/or the disparity map can be fused with other inputs such as IR projectors to enhance the depth map. Possible enhancements include reducing noise, filling in missing values, and clarifying edges of objects.

- Translation to real-world coordinates: Using the baseline, which is the distance between the two cameras, and the camera’s calibration data (which is also used in Step 1., and isn’t discussed here since the calculation of such data happens prior to execution of the algorithm), a mathematical operation is applied to each pixel in the disparity map, transforming from pixel coordinates to real-world coordinates, e.g., meters. We end up with a depth map of real-world distances of pixels (or the objects they represent) from the stereo cameras.

Improving computer vision’s accuracy over time

While Descartes thought people used a type of “natural geometry” to estimate depth, computers instead use actual geometry (and trigonometry, optimization, and other mathematical techniques). And just like how biological scientists have continued to study human vision and develop more sophisticated models, computer scientists have continued to study computational stereo vision.

Us humans aren’t estimating depth freshly every time we blink—we’ve learned over our lifetimes how to improve our depth perception. But for the past decade or so, computer scientists have been researching ways to use machine learning and deep learning to improve the accuracy and reliability of stereo depth algorithms.

Two main approaches computer scientists attempt to improve stereo depth algorithms’ accuracy are:

- Using machine learning to determine parameters that go into an existing stereo depth algorithm based on scene characteristics or other variables

- Using machine learning to come up with a better model to compute matching pixels directly

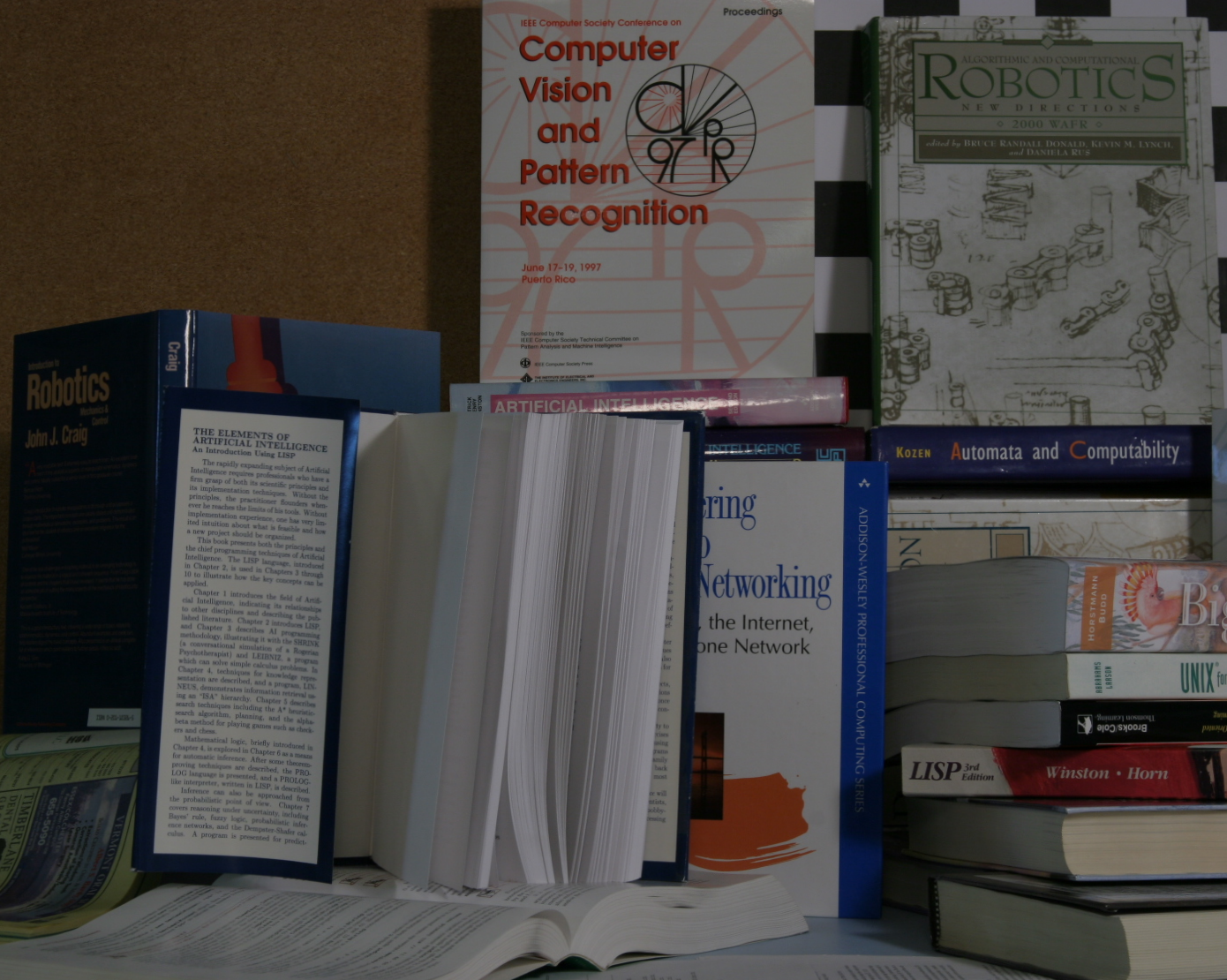

Traditional stereo vision algorithms usually determine the best (right) match for a (left) pixel based on a combination of pixel brightnesses from a window of nearby (left) pixels, but the machine learning algorithms in the second approach use a learned model to determine these best matches. Scientists are investigating ways to improve the speed and generalizability of these models, which are often trained using standardized, publicly available datasets that only have stereo images with a particular baseline.

All of this begs another question, though.

Is it possible to judge depth with just one camera?

To answer this question, let’s turn again to human vision.

Monocular depth

Seeing with one eye is called monocular vision. Biologists know that it is possible to judge relative depth of objects just using monocular vision. That is, given several objects in our field of view, we can use context to determine which of them are closer or farther away. We use cues like whether one object overlaps another (judging the overlapped object to be farther away) and linear perspective (we know that parallel lines converge at a far distance). Monocular depth cues are heavily rooted in our past experience and familiarity with similar objects and situations. (It still takes two eyes on the same plane to make precise depth judgments without relying on the position of other objects in our fields of view.)

Likewise, as computer scientists begin to investigate monocular depth estimation, they look to machine learning to help computers learn from past experience how to judge depth from a single image instead of two. These techniques are much newer in development compared to stereo depth techniques, which have been researched for decades and don’t have to rely on machine learning.

Machine Vision at Daedalus

At Daedalus, we’re not only fascinated by the improvements in technology like this over time – but we also use machine vision in several projects we work on day-to-day. From data collection, to training an AI model, to analyzing results of projects over time, tools like stereo vision and stereo depth algorithms play a large part in research, design, and engineering efforts.

Are you looking for the right design firm to help with your next software development project, or need a vendor familiar with using software for tracking project success? Get in touch to see how we can help.