As a part of this year’s World Interaction Design Day, the Daedalus UX team attended an Abusability Testing Workshop, organized by our local IxDA Chapter. World Interaction Design Day is an annual event in which members of the global design community come together to illustrate how interaction design improves lives. This year’s theme was “Trust and Responsibility”, and the Abusability Testing Workshop’s goal was to highlight how technology made with even the best of intentions can be used for nefarious purposes.

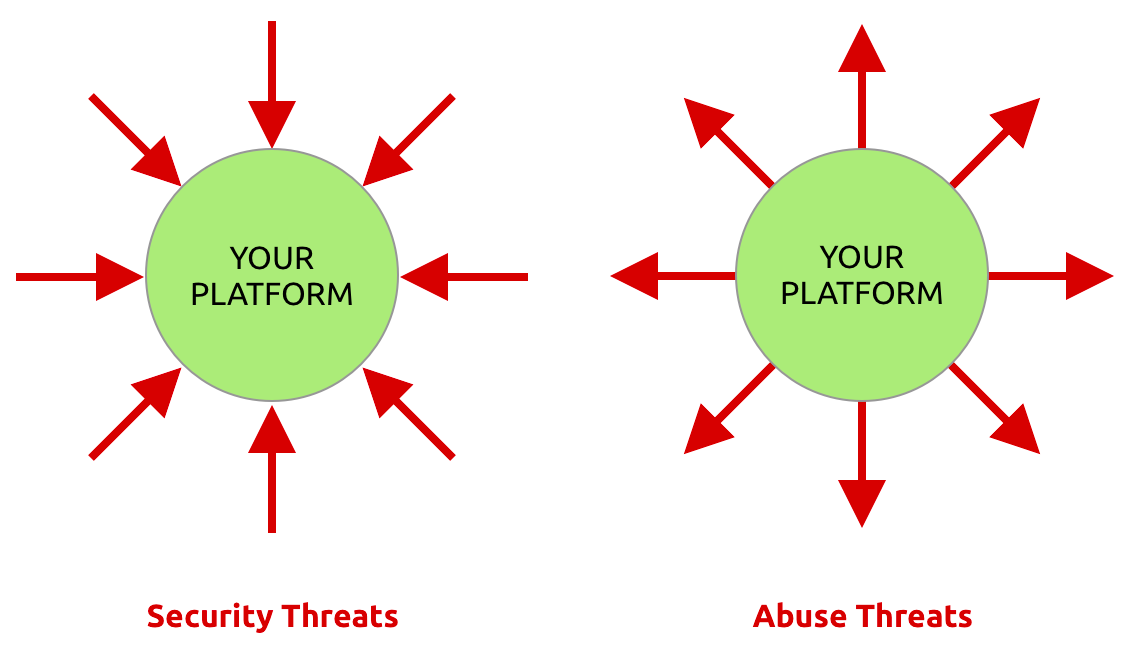

Unlike security attacks, which come from outside your system, abusability refers to the weaponized use of your own product or platform.

The problem has existed for years, but the recent rapid spread of misinformation across social media has made clear how vulnerable these platforms are to abuse by people acting in bad faith.

Hosted by Anna Abovyan and Theora Kvitka, M*Modal User Experience Manager and Design Lead (respectively), and Allison Cosby, ShowClix Product Manager and UX Specialist, the Workshop started with a discussion of what abusability means, and a clip from Netflix’s dystopian science fiction show, Black Mirror, illustrating the issue. Imagine living in a world where your status in life relies on the ratings that you’ve been given by other people, even strangers, in everyday interactions. In this particular scene, a young woman starts encountering stressful situations on her way to a wedding, and because of her less-than-ideal responses, her social ranking begins to fall and quickly devolves into a downward spiral as she continues to become more anxious and stressed.

Participants in the workshop then broke into smaller groups to produce their own Black Mirror-esque scenarios. Each group member named an emerging technology that they were particularly interested in and described the benefits it provides. Other members of the group then crafted ways in which that technology might be abused. My group dove into the not-too-distant future of autonomous drone delivery, which can help people get products quickly and easily. We brainstormed ideas on how it could end badly, including an abuse scenario that led to the arrest of an innocent customer.

Later, each breakout group shared its fictional dystopia, and the entire assembly ideated on ways to mitigate the imagined abuses. As a group, we also reflected on how integrating technology into our everyday lives has brought unintended consequences, like ‘smart’ thermostats turning houses into freezers, baby monitors spying on families, and social media networks making us more divided and polarized instead of bringing us together.

The Abusability Workshop clearly illustrated the need for companies and designers to be proactive, rather than reactive, about abuses of our platforms. Often, we are so focused on getting a design created and built for its anticipated uses that we forget to consider how someone with fraudulent goals might misuse it, or even how it might behave in unexpected situations. Assessing the trauma and scrambling to contain the damage after an abuse has been perpetrated are no longer good enough (if they ever were).